How Do You Ensure the Quality of AI Systems?, Part 1 - Prepping Data, Ethics and the Law—Identifying the Key Concerns

2023/03/16 Toshiba Clip Team

- AI models can be beyond our comprehension

- Three major challenges with AI based on machine learning

- Toshiba’s guidelines for ensuring the quality of AI systems

Artificial Intelligence (AI) has been part of our collective vocabulary for a while now, and its technological advances are irreversible. We need to think deeply about these advances, on the assumption that they will change our lives and the structure of society. However, the debate about the place of AI systems in society, and about the approaches we need to follow when engineering them, is still young. Most notably, people working in the field around the world are exploring and refining an approach called Quality Assurance of AI Systems. They are also asking whether AI truly delivers the level of performance claimed for it; for instance, AI-based face-recognition systems have erroneously labeled people as animals. As a leading company with over 50 years of experience in AI research and development, Toshiba is focused on this problem. To learn more, we spoke with Mr. Kei Kureishi from Toshiba’s Corporate Software Engineering & Technology Center, who is involved in an AI system quality assurance project.

What exactly is AI?

AI is about giving computers the ability to mimic or reproduce human cognitive capabilities. There are various flavors of AI. The one we will focus on here is AI that utilizes machine learning, a mechanism in which the system is given a vast amount of data, learns from it on its own by extracting latent patterns, and then builds a model for a specific task. A popular method of machine learning is known as deep learning.

The first AI boom occurred over half a century ago. We are now in the third boom. This time around, however, it’s more than a mere boom. A major reason why AI has attracted so much attention this time is that AlphaGo, an AI system developed by Google, defeated a professional human Go player in 2015. Kureishi explained why this is seen as important.

Kei Kureishi, Expert, Software Engineering Technology Department, Corporate Software Engineering & Technology Center, Toshiba Corporation

“Before AlphaGo, IBM’s chess computer Deep Blue had defeated the chess world champion. However, Go is orders of magnitude more complex than chess, and people at the time thought it would be impossible for AI to defeat a human at Go. So why was AlphaGo able to do it? AlphaGo essentially learned how to play Go by itself, through exposure to a huge volume of game data: tens of millions of iterations. Once an AI system reaches this scale, even its developers have a hard time understanding its thought patterns.”

Although AI was developed by people, it has quickly reached a level beyond human comprehension. This is the reality of AI today.

Even humans who develop AI models do not understand them

When an AI system is trained through deep learning, even its developers cannot predict how it will use the input data to generate output. Thus, the idea of incorporating this AI into social infrastructure and other critical systems raises the question of whether it will really work as intended. The key is figuring out what we need to do to use AI systems with confidence. This challenge is called Quality Assurance of AI Systems, and it is being discussed by developers around the world who are attempting to implement AI in society, including engineers at Toshiba.

“At Toshiba, we launched the AI Quality Assurance Project to discuss this issue,” says Kureishi. “For quite some time, we have been discussing how to assure quality in the development of AI-equipped systems. We initiated the project in 2019 to develop quality guidelines, as we were receiving an increasing number of inquiries about quality assurance from outside the company.”

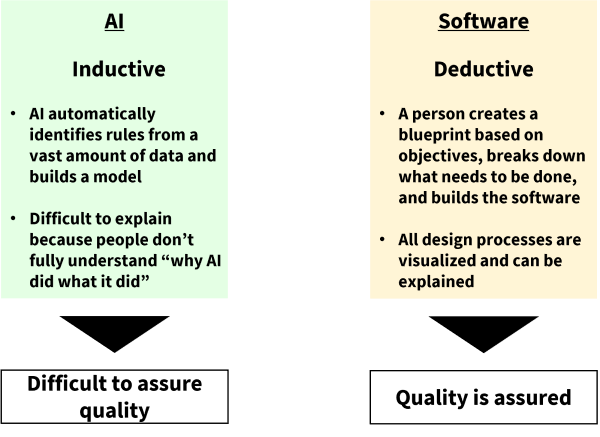

Kureishi was originally a researcher specializing in software engineering, the methodology for efficiently developing high-quality software. How did he come to take on this kind of project? The answer lies in the unique nature of AI. Typically, software is developed deductively from a blueprint, but machine learning AI, including deep learning, is inductive. He ran into this difference while advising other software engineers on software quality: they needed to know how to ensure the quality of an AI’s performance, and how to make AI work efficiently alongside other software in the same system.

In deductive software development, a person first creates a blueprint, breaks down what needs to be done to achieve the objectives set forth in it, then codes the software accordingly and checks it for bugs. All of these processes can be visualized and explained, which is what allows software quality to be assured. In the inductive method, however, AI identifies rules autonomously from a vast amount of data and constructs models based on them, so people cannot fully understand why the AI did what it did. In short, since people cannot explain the AI’s actions or predict what kind of output it will produce, it is difficult to ensure quality. Recognizing this fundamental difference is critical when considering quality assurance for AI. Because Kureishi understands this difference, he became deeply involved in the project when system and software engineers who wanted to make use of AI came to him with questions about assessing quality.
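The contrast can be made concrete with a small Python sketch. Everything in it is an illustrative assumption rather than anything from Toshiba’s project: the overheating scenario, the 80 °C specification limit, the training readings, and the function names. The first function encodes a rule that a person wrote from a specification; the second derives its rule from labeled examples.

```python
from typing import List, Tuple

# Deductive: the rule comes straight from a (hypothetical) specification.
SPEC_LIMIT_C = 80.0


def is_overheating_by_spec(temp_c: float) -> bool:
    """Rule written by a person; its rationale is the specification itself."""
    return temp_c > SPEC_LIMIT_C


# Inductive: the rule is inferred from labeled examples.
def learn_threshold(samples: List[Tuple[float, bool]]) -> float:
    """Pick the threshold that misclassifies the fewest training samples."""
    values = sorted(t for t, _ in samples)
    # Candidate thresholds: midpoints between neighboring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        return sum((t > threshold) != label for t, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled readings: (temperature in Celsius, overheating?).
training_data = [
    (61.0, False), (70.0, False), (74.0, False),
    (79.0, True), (85.0, True), (92.0, True),
]
learned_limit = learn_threshold(training_data)


def is_overheating_learned(temp_c: float) -> bool:
    """Rule whose only rationale is the data it was fitted to."""
    return temp_c > learned_limit


print(learned_limit)                  # 76.5
print(is_overheating_by_spec(78.0))   # False
print(is_overheating_learned(78.0))   # True
```

With this data the learned limit is 76.5 °C, so a reading of 78 °C is flagged by the learned rule but not by the specified one. The learned rule can only be justified by pointing at the data it was fitted to, and a deep learning model, which typically has a very large number of learned parameters instead of one threshold, is far harder to explain.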

Approach to quality in inductive AI vs. in deductive software

“Let’s say you have an AI that recognizes images of cats, achieved by extracting the common elements from a vast assortment of cat images. Shown images of a cat and a dog, it can instantly and accurately determine which one is the cat. However, what exactly did the AI extract from those images as features common to cats, and how did it do it? People can’t understand this decision-making process.”

What are the three factors that complicate quality assurance for AI?

There are three issues that must be understood when thinking about AI quality: the black box, bias, and security.

The black box issue refers to our inability to shed light on the inductive process used by AI learning algorithms. Kureishi expressed his frustration with this issue as follows:

“That’s exactly the topic we are trying to figure out right now: Is the AI model we developed really, actually perfect? We have to consider whether it is truly all right to offer the model to society as it is. In response to this question, engineers tend to propose using a larger volume of data or increasing the number of learning layers as measures to increase accuracy and assure quality. However, I don’t think that fundamentally solves the issue. Our obligation is not only to achieve high performance, but also to explain how much of the output is actually correct. In that sense, solving the black box issue is not so simple.”

The bias issue occurs when the training data itself is biased. For example, many people envision a man when they hear the word “doctor” and a woman when they hear the word “nurse.” A human cognitive bias is at play here. Although gender has no inherent connection to professions such as doctor or nurse, the bias does exist in practice. If most of the data collected showed male doctors and female nurses, how would the AI determine the profession of a female doctor in a white coat? It might well label the doctor as a “nurse.” This is the bias issue.
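The doctor and nurse scenario can be reproduced with a deliberately simple Python sketch. The record counts, the feature names, and the frequency-based classifier are all assumptions made for illustration; real image recognition models are far more complex, but skewed data can affect them in a similar way.

```python
from collections import Counter, defaultdict


def train(records):
    """Count how often each profession appears for each feature tuple."""
    counts = defaultdict(Counter)
    for features, profession in records:
        counts[features][profession] += 1
    return counts


def predict(counts, features):
    """Return the profession seen most often with these features."""
    return counts[features].most_common(1)[0][0]


# Hypothetical, deliberately skewed training set: everyone wears a white
# coat, so gender is the only feature that separates the records.
records = (
    [(("male", "white coat"), "doctor")] * 90
    + [(("female", "white coat"), "doctor")] * 10
    + [(("male", "white coat"), "nurse")] * 5
    + [(("female", "white coat"), "nurse")] * 95
)

model = train(records)
prediction = predict(model, ("female", "white coat"))
print(prediction)  # nurse
```

The classifier does nothing wrong by its own logic: it reports the majority label in the data it was given. Every female doctor is nevertheless labeled a nurse, which shows how the error originates in the data rather than in the code.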

“There is growing concern, for example, about cases in which image recognition systems misidentify human faces as those of animals. In this way, the bias issue can evolve into an ethical issue. These problems arise because society has biases in the first place, and the data collected reflects those biases. It’s not easy to solve because the roots of the issue run so deep.”

The third issue, security, is also troubling. AI has a surprising weakness: it is easily misled. For example, if an image recognition AI used in a self-driving car detects a symbol consisting of a horizontal white line against a red circular background, it may judge it to be a “no-entry” road sign. Such symbols, however, can also appear in generic designs. If a self-driving car were to stop suddenly every time it recognized a similar symbol, it could cause a collision.

“Any symbols that look similar to one another to a person can be easily misrecognized by AI. The trouble with this, again, is that people cannot understand why the AI makes the decisions it does, or the process that led it to make those decisions. We also have to consider the security aspect of AI, as attacks could take advantage of this vulnerability to intentionally mislead AI systems.”
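How little it can take to mislead a model intentionally is easiest to see on a toy example. The Python sketch below assumes a hypothetical linear “sign detector” with made-up weights and a four-value input; it is not a real road-sign model. It nudges every input value by a small amount in the direction that raises the score, a sign-based perturbation in the spirit of the fast gradient sign method, here applied to a linear scorer.

```python
from typing import List

# Hypothetical weights of a toy linear "sign detector"; not a real model.
WEIGHTS = [0.9, -0.6, 0.4, -0.8]
BIAS = -0.1


def score(x: List[float]) -> float:
    """Weighted sum of the input features plus a bias term."""
    return sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS


def classify(x: List[float]) -> str:
    """Label the input as a no-entry sign when the score is positive."""
    return "no-entry" if score(x) > 0 else "other"


def perturb(x: List[float], eps: float) -> List[float]:
    """Shift each feature by eps in the direction that raises the score."""
    return [v + eps * (1 if w > 0 else -1) for v, w in zip(x, WEIGHTS)]


original = [0.4, 0.5, 0.3, 0.2]          # hypothetical input features
adversarial = perturb(original, eps=0.05)

print(classify(original))     # other
print(classify(adversarial))  # no-entry
```

No feature moves by more than 0.05, yet the label flips. An attacker who knows or can estimate the weights can choose such changes on purpose, which is why this weakness is a security concern as well as an accuracy one.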

Guidelines for what to focus on in an AI-equipped system

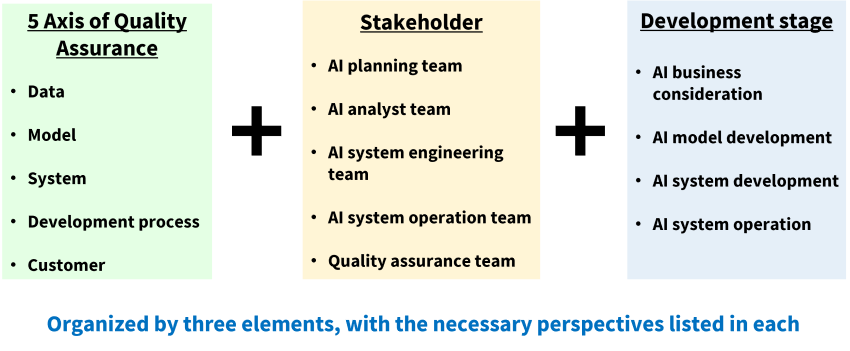

As we have seen, AI at present is far from perfect. As a practical matter, however, it is already widely deployed in society, which makes quality assurance of AI systems a pressing concern. This is why Toshiba has compiled a set of basic guidelines, the Quality Assurance Guidelines for AI Systems. The guidelines cover the essentials of developing an AI model and incorporating it into a system, such as what to focus on, points that require attention, what to consider from the quality aspect, and how to advance the development process.

“These comprehensive guidelines encompass data sufficiency and ethics, legal challenges, communication with clients, attitudes toward risk, and more. They include checkpoints from diverse perspectives because so many stakeholders are involved besides the AI system developers, including the sales representatives who work directly with clients and AI system operators.

“The more AI is used in society, the more quality assurance is called into question. For a company like Toshiba involved in social infrastructure systems, it is even more important to have appropriate guidelines. We do not think of the guidelines as a finished product. We expect to continually update them, working with our clients and with consideration for society’s evolving requirements. Eventually, I believe that quality assurance will become the norm for AI, and that AI will be more fully utilized to support society. This is my goal.”

In addition to the aforementioned guidelines, Kureishi and his colleagues have laid out a detailed process for AI development. To support this process, they are also developing techniques to evaluate and visualize the quality of AI systems. These unique initiatives at Toshiba will be discussed in detail in Part 2.

Toshiba’s approach in the Quality Assurance Guidelines for AI Systems

*Product names mentioned herein may be trademarks of their respective companies.
