Instant Voice-to-Text! Transforming Work Styles with the Power of AI

2019/12/11 Toshiba Clip Team

We are fast approaching a time where more people can live beyond a hundred years old. At the same time, however, there are labor shortages due to low birthrates and an aging population. RPA (Robotics Process Automation)—using robots to automate work processes—has been touted as a possible way to both resolve these labor shortages and increase productivity by transforming the way we work. RPA has been introduced in finance and other fields, producing great results in automating document creation and data entry tasks.

Nevertheless, many companies still need to carry out tasks such as recording minutes of meetings and transcribing speeches. While AI and software that automatically converts speech to text are already available on the market, converting speech to text accurately still needs to be done manually.

How can we solve this issue and help create a society that is easy to work in? Toshiba provides an answer with its newly-developed speech recognition AI.

We asked Mr. Ashikawa and Mr. Fujimura of the Toshiba Corporate R&D Center, which developed AI, to tell us more about the history of speech recognition using AI and the breakthroughs they made during development.

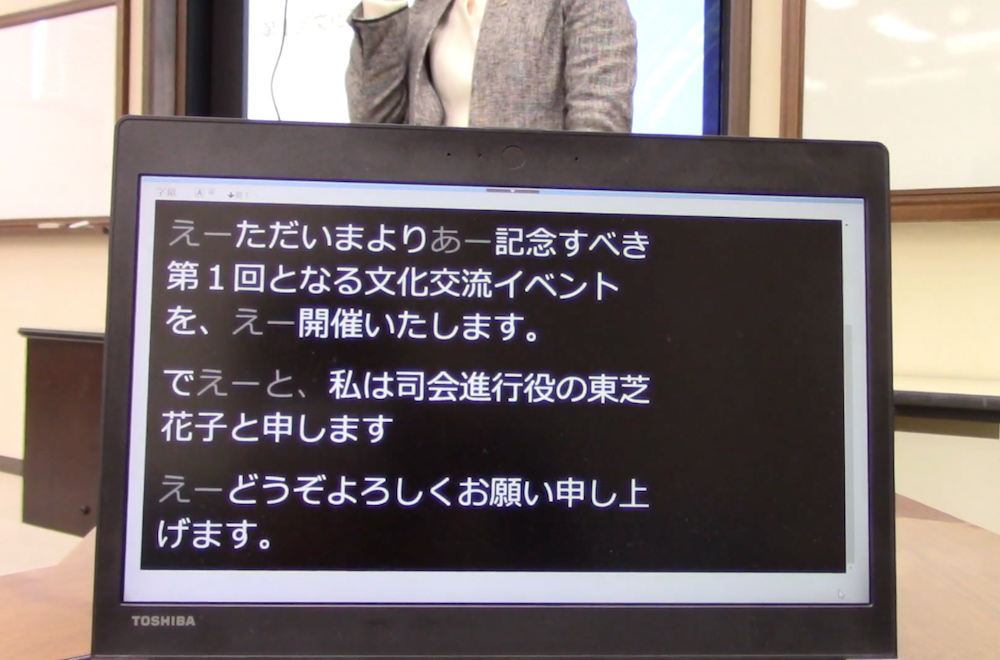

Smooth speech transcription with a fast, easily readable display

Toshiba has a history of working on media intelligence, a field which makes use of human voices and images that have undergone information processing. The foundation the company has cultivated in the field over many years plays a big role in the creation of this voice recognition AI.

Toshiba first began developing AI in 2015. At the time, there was increasing momentum around the world in the field of information accessibility, which aims to create environments that enable people that are deaf and hard of hearing to access and input information. Toshiba has started “Universal Design (UD) Advisor System” since 2007 to enable employees with disabilities to participate in product development. The company believes in promoting diversity and inclusion in the workplace and develops UD-friendly products and services through the years.

“When we interviewed the hearing-impaired people in UD Advisor System, we found out that they wanted to participate in meetings and lectures in real time, and not just read the transcripts provided subsequently. So we tried to provide a function that would automatically display easy-to-read subtitles in real time. To assist the hearing-impaired people in collecting and providing information, we need to do two things: expand information accessibility for the hearing-impaired, and increase productivity. The development of speech recognition AI started from these two points in mind.” (Ashikawa)

Taira Ashikawa, Head of Research, Media AI Laboratory, Toshiba Corporate R&D Center

The technology behind the accuracy in speech recognition

When you describe speech from people’s conversations during meetings and lectures, you will end up with a text that is hard to read. Anyone who has ever transcribed speeches can tell you that. There is a lot of unnecessary content that gets in the way of getting information such as meaningless filler words like “Uh,” and “Umm” and expressions of agreement that add nothing to the content.

The speech recognition AI Toshiba developed is able to recognize speech with high accuracy and detect fillers and hesitation markers as well. This is an essential function when it comes to increasing productivity. Algorithms form the core of AI, and the development team explored a variety of approaches to increase accuracy.

“At first we hit a wall because the level of accuracy of recognition just wouldn’t increase no matter what we did. Our primary goal was to provide users with something that they could use conveniently. By using the increasingly popular model known as LSTM (*1) as well as CTC learning (*2), we tried to teach AI about speech peculiarities such as fillers and hesitation markers that are exclusive to human beings.” (Fujimura)

(*1) LSTM (Long Short-term Memory): one of the developed forms of RNN (Recurrent Neural Network), which has a recursive structure in a hidden layer. It is able to learn long-term dependency relationships which are difficult for conventional RNNs to do.

(*2) CTC (Connectionist Temporal Classification): A method for training RNN to solve problems where sequence lengths differ during input by introducing null characters and adjusting loss functions.

Hiroshi Fujimura, Lead researcher, Media AI Laboratory, Toshiba Corporate R&D Center

Up until now, speech recognition has worked by analyzing sound wave patterns and parsing them by identifying that this part is “a,” this other part is “i” and so on. However, fillers and hesitation markers have an endless variety of patterns, and it would take a long time to learn about them one by one.

“We used LSTM to capture information such as ‘this is what fillers are like,’ ‘this is what it sounds like when someone hesitates over a word,’ as a statistical model and then used CTC learning to make the AI learn it as a model. Through that, the AI became capable of detecting the countless patterns of fillers and hesitation markers as well.

There is still plenty of room for improvement in development and technology to achieve a fully accurate speech recognition offering. Our speech recognition AI can recognize speech in Japanese, English and Chinese for now. We strive to develop an environment where speakers of different languages will be able to enjoy a smooth conversation with one another. When we develop AI, we dream of taking something like that, which you only see in futuristic science fiction or comic books, and making it a reality.” (Fujimura)

This video was released on March 14, 2019.

This is how the AI evolved into speech recognition AI with superior accuracy. When the development team used lectures as an opportunity for verification testing, the AI achieved an average speech recognition ratio of 85%. That means it was able to recognize the contents of speech above a certain level without editing or advance learning. Now that they have raised the accuracy of the speech recognition, they are considering applying it to the communication AI known as RECAIUS™.

They developed applications where a representative affair is a real-time subtitle display function for the hearing-impaired people. They harness AI to display speech clearly with fillers and hesitation markers reflected in faint, non-obtrusive subtitles. This was a user-friendly specification introduced following detailed discussions with users.

Photo (automatic speech subtitling system (left) and image of displayed subtitles (right))

“As far as we’re concerned, filler words like “umm” and “uhh” just get in the way. However, what the hearing-impaired people really want is to get as much information as possible. When they read the subtitles while following the movements of the speaker’s lips, they get stressed when fillers and hesitation markers are cut out because they feel that the speaker is saying something that isn’t being reflected in the text.

So we decided to leave the fillers and hesitation markers in the subtitles but is displayed faintly to make the text easier to read. However, when we record them as transcribed documents, we remove the filler and hesitation markers. That way, we get brief and concise documents.” (Ashikawa)

AI shows its true worth in manufacturing as well.

In March 2019, Toshiba collaborated with DWANGO Co., Ltd. and held a live broadcast of the 81st National Convention of the Information Processing Society of Japan on video website “niconico”. Subtitled videos were distributed online in real time. They are planning to deploy it not only for office tasks but for use in manufacturing settings as well.

“It’s rare to see speech recognition being used as a service in offices today. So it would be ideal for us if users would trust our product and use it, and if it could become something they used in everyday business without being conscious that it’s a speech recognition AI. For example, the words we are speaking right now could become a text polished enough to be used as a business document, with the speakers clearly identified to show who said what. We hope to create a speech recognition AI that is handy and reliable.” (Ashikawa)

“The use of speech recognition hasn’t been applied in manufacturing sites. However there is a need for hands-free voice collection and recording in factories during maintenance and inspections. So I think there’s room for this speech recognition AI to be adopted there as well.

We hope to use our knowledge and know-how about manufacturing facilities to seamlessly integrate speech recognition into their operations. We can do that because we have spent a long time developing speech recognition AI and accumulated knowledge about manufacturing and infrastructure settings. ’Why does Toshiba work on speech recognition?’ I think this will provide one of the answers to that fundamental question.” (Fujimura)

With the numerous potential applications and benefits, there is no doubt that this speech recognition software will be making its presence increasingly felt in more offices and manufacturing sites in the near future.

![]()