Hisashige Tanaka, one of Toshiba’s founders, was famously known as “karakuri giemon,” the genius of mechanical things. His automata, the forerunners of robots, performed complex movements—like the renowned “Yumihikidoji,” a boy archer who fits an arrow to his bow and fires it at a target. This inventiveness has long been the lifeblood of Toshiba, and perhaps explains the company’s interest in creating new robots over its long history.

In karakuri giemon’s day, automata were a source of awe and entertainment, but today’s robots address the needs of society. They need to be able to do something, to provide solutions.

We are now 20 years deep in the 21st century. What kind of “karakuri,” or creative mechanics, has Toshiba prepared to answer modern society’s various needs?

Highly-intelligent robots and their place in the world

One societal challenge in Japan is labor shortage. The working population is shrinking, and in recent years, the manpower shortage has become a serious social issue. Though many industries are facing similar problems, the logistics industry has been hit especially hard. Terms like “logistics collapse” bring to mind society-breaking scenarios, and it’s clear that this is a problem that must be addressed as soon as feasibly possible.

Toshiba has developed a variety of robots to address the needs of the manufacturing, distribution, and logistics industries. In 1967, more than half a century ago, Toshiba developed an automatic postal code reading and sorting device—the first of its kind in the world. This machine, timed for the postal code system that would be implemented in Japan the following year, mechanized the pre-sorting process that had been done by hand for years, and helped support Japan’s development during the country’s high economic growth period. Toshiba boasts a high market share in this area to this day.

The world’s first automatic handwritten postal code reading and sorting device, announced in 1967

This postal code reading and sorting device mechanized a process that had required people to look at delivery addresses and sort the mail manually, and in doing so it improved overall efficiency. The machine was also much faster than human labor and could operate with minimal downtime. However, for the rest of the work that goes into delivering people’s mail, such as getting each item to the right address, human intervention was still necessary.

Robots have been implemented in similar ways in the manufacturing, distribution, and logistics industries to automate various processes. So why are we still seeing talk about the “logistics collapse”? The answer is that this particular kind of “logistics collapse” is based squarely on the growing labor shortage. In other words, to solve this problem, we need large-scale automation of processes we weren’t able to automate in the past—automation of processes that we’d previously believed had to be conducted by human beings.

What happens when robots take over human work?

If there’s one thing that industrial robots have been good at, it’s repeating the same process over and over again. What they’ve been less good at is altering what they do based on different circumstances and environments. This is because robots haven’t had the same abilities that humans have. Though they could operate within defined parameters, they could not choose the most efficient way to do something in the face of a constantly growing set of scenarios. They couldn’t deal with irregular work.

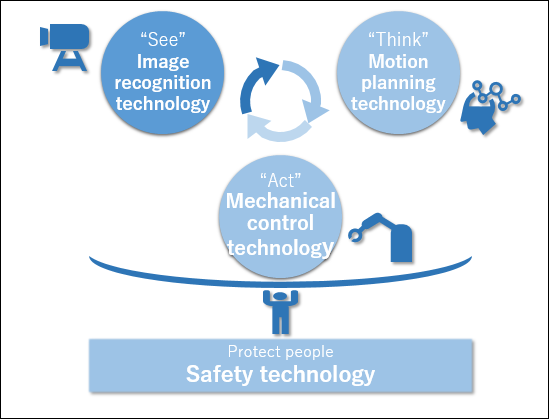

In order to get robots to perform this so-called irregular work, we’d have to create an intelligent robot—one that could “see,” “think,” and “act”.

In more concrete terms, the robot would have to “see” via image recognition technologies, “think” via motion planning technologies, and “act” via control technologies.

These robots will also be working more closely with human beings than ever before, and as such will require that all of their technologies be supported by safety technologies to protect the human beings around them.

Human beings are constantly “reading” their surrounding environment through their senses. To create robots that have the same kind of situational awareness, we’d need very high-level image recognition technologies.

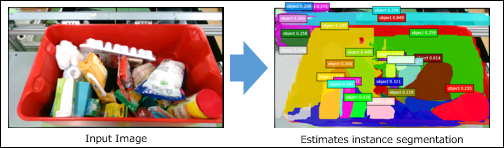

For example, if you wanted a robot to be able to pick up a particular box out of a messy stack among boxes of different sizes, you’d need to equip it with instance segmentation technology, which would allow it to accurately discern what kind of objects there are and how they’re placed. Toshiba boasts the world’s highest level of estimated accuracy in instance segmentation technology [1].

Toshiba achieved the world’s highest level of estimated accuracy with regards to instance segmentation technology

1: V. Pham et al. “BiSeg: Simultaneous Instance Segmentation and Semantic Segmentation with Fully Convolutional Networks”. The Proceedings of the British Machine Vision Conference 2017

With this image recognition technology, the robot acquires the information it needs to “act,” to utilize its control technologies.

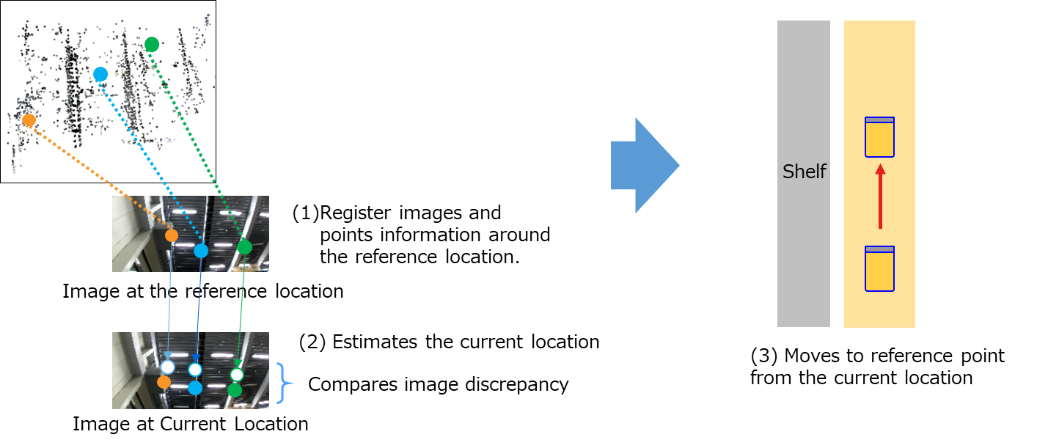

Robots thus far have “traced” (recognized) markers drawn on rails or on the ground to move accurately. This image recognition technology, however, would allow robots to compare their current position against a standard base position, allowing them to estimate their own location and move freely and accurately even in areas without rails or markers. This is an essential function in terms of the robot being able to figure out the optimal path to a certain place on its own. You could say, in that sense, the image recognition technology is trying to do for robots what eyes do for human beings.

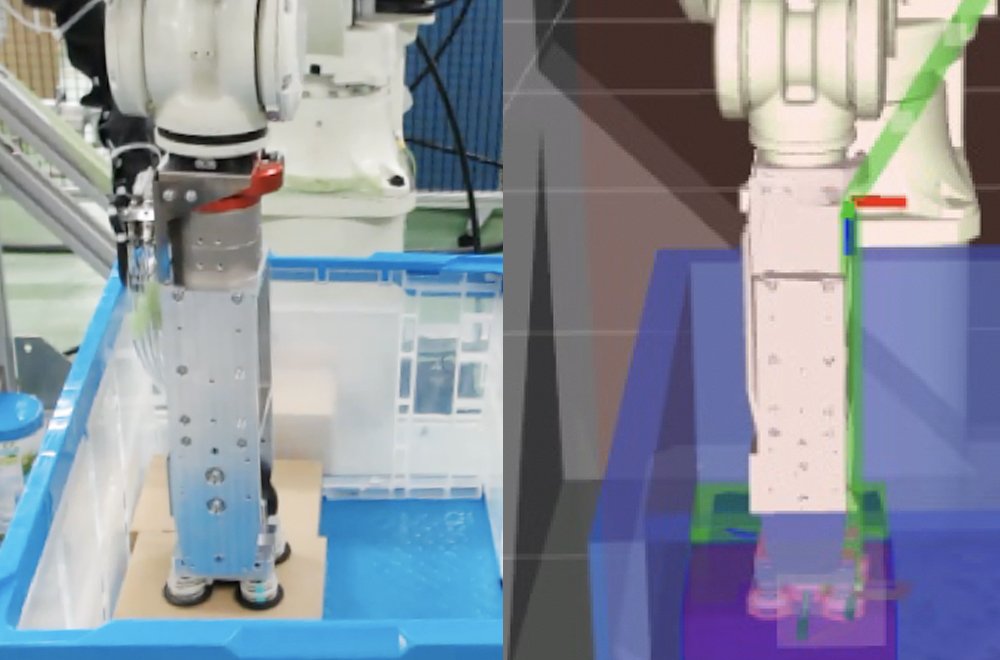

Robots can estimate their own location from the images they “see,” eliminating the need for rails or markers

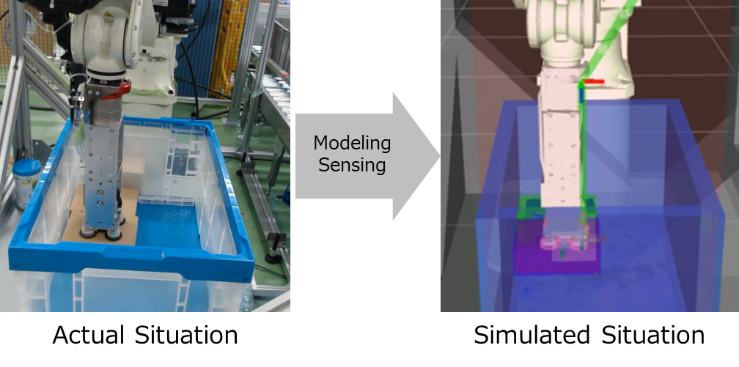

The goal with motion planning technologies is for robots to use the information they gained through the image recognition technology to “think” and later “act” for themselves. When we reach to take something out of a box, we think about where the object is, how big it is, how heavy it is, and how we could maneuver our hands so that we could pick it up without our hands hitting the other objects or the inside of the box.

Similarly, there has to be a way for robots to plan their movements: how they should move the robot arm, and whether they can pick up the object without damaging other things. Until now, these decisions were made for them by human beings. With motion planning technology, however, the goal is to have robots “think” for themselves using the information they’ve acquired, and make plans on their own. To create an ideal motion plan, a robot would need to take the information it acquired from the image recognition technology, run a series of simulations on computer models, and select what it considers to be the best plan.
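To make the “simulate, then choose” idea a little more concrete, here is a minimal Python sketch. It is not Toshiba’s planner: the `Box` obstacles, the candidate paths, and the “shortest collision-free path wins” rule are all assumptions made for illustration. Each candidate path is checked against the obstacles reported by image recognition, and the shortest path that touches nothing is selected.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned obstacle in the (x, z) plane, in metres."""
    x_min: float
    x_max: float
    z_min: float
    z_max: float

    def contains(self, point):
        x, z = point
        return self.x_min <= x <= self.x_max and self.z_min <= z <= self.z_max


def sample_segment(start, end, steps=20):
    """Return evenly spaced points from start to end, both included."""
    return [
        (start[0] + (end[0] - start[0]) * i / steps,
         start[1] + (end[1] - start[1]) * i / steps)
        for i in range(steps + 1)
    ]


def collides(path, obstacles):
    """Simulate the path and report whether any sampled point hits an obstacle."""
    for a, b in zip(path, path[1:]):
        for point in sample_segment(a, b):
            if any(obstacle.contains(point) for obstacle in obstacles):
                return True
    return False


def path_length(path):
    return sum(dist(a, b) for a, b in zip(path, path[1:]))


def choose_plan(candidates, obstacles):
    """Pick the shortest collision-free candidate, or None if none is feasible."""
    feasible = [p for p in candidates if not collides(p, obstacles)]
    return min(feasible, key=path_length) if feasible else None


# Example: one box stands between the arm's start and goal positions.
obstacles = [Box(0.4, 0.6, 0.0, 0.5)]
direct = [(0.0, 0.2), (1.0, 0.2)]                          # runs through the box
over = [(0.0, 0.2), (0.0, 0.8), (1.0, 0.8), (1.0, 0.2)]    # lifts over it
higher = [(0.0, 0.2), (0.0, 1.0), (1.0, 1.0), (1.0, 0.2)]  # lifts higher than needed
best = choose_plan([direct, over, higher], obstacles)
```

A real planner would score far more than path length (time, joint limits, payload), but the structure of generating candidates, simulating them, and keeping the best one is the same.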

Once the motion plan has been created, the next step would be to “act” on it. For this, they’d need control technologies. In humans, this would be the ability required to move in skillful ways.

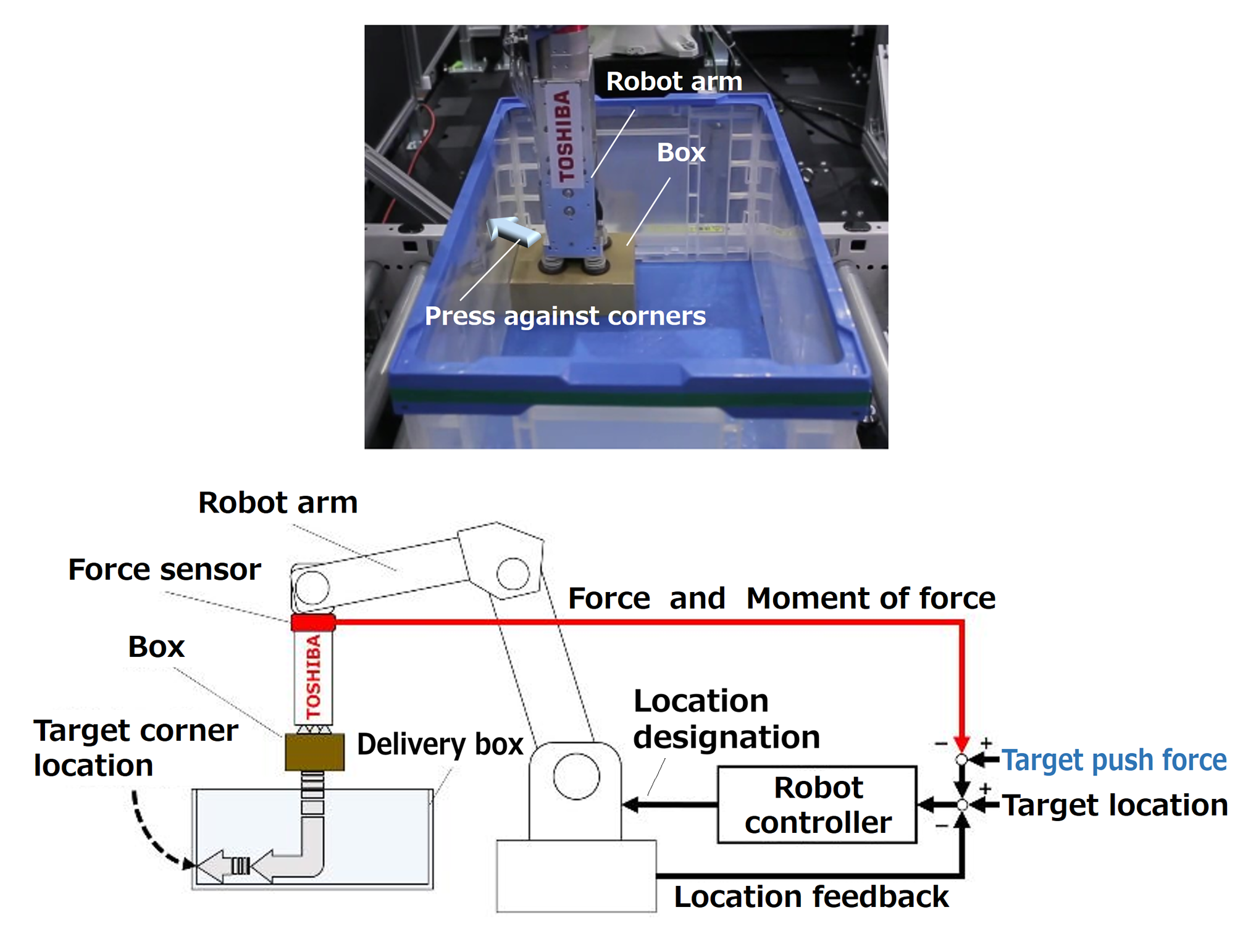

Here at Toshiba, we believe that a robot arm, for example, would need to do more than move exactly as prescribed in the simulation to be considered skillful.

For instance, when putting boxes in a container, we as human beings would tend to place the boxes near the edge of the container. To do that, we check where the edge of the container is, then move the box into that range. Then we push the box toward the inside “wall” of the container and relax our hands only after we feel the box make contact with the wall and know that it is now at the edge of the container. A robot arm would need technology that lets it do this kind of work, which human beings do naturally, to be considered skillful.

In other words, to move skillfully, the robot arm would need to be able to detect contact between the box and the wall through the force sensor attached to it.

The robot arm’s force sensor, which detects contact between boxes and walls, allows it to place boxes at the edge of a container, as a human being would.
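The behavior described here, pushing until contact is felt and only then letting go, is often called a guarded move. The Python sketch below shows the idea with a simulated arm. Everything in it is hypothetical: the `SimulatedArm` class, the 5 N contact threshold, and the spring-like wall are illustrative assumptions, not details of Toshiba’s controller.

```python
class SimulatedArm:
    """Hypothetical stand-in for a robot arm holding a box near a container wall."""

    def __init__(self, wall_at_mm, stiffness_n_per_mm=2.0):
        self.position_mm = 0.0
        self.wall_at_mm = wall_at_mm
        self.stiffness_n_per_mm = stiffness_n_per_mm
        self.released = False

    def read_force(self):
        # Assumed model: the wall pushes back like a spring once it is touched.
        overlap_mm = max(0.0, self.position_mm - self.wall_at_mm)
        return overlap_mm * self.stiffness_n_per_mm

    def step_toward_wall(self, step_mm=1.0):
        self.position_mm += step_mm

    def release(self):
        self.released = True


def guarded_place(read_force, step_toward_wall, release,
                  threshold_n=5.0, max_steps=1000):
    """Advance in small steps until the force sensor reports contact, then release.

    Returns True if contact was detected and the box was released,
    False if the step budget ran out without contact.
    """
    for _ in range(max_steps):
        if read_force() >= threshold_n:
            release()
            return True
        step_toward_wall()
    return False


arm = SimulatedArm(wall_at_mm=50.0)
placed = guarded_place(arm.read_force, arm.step_toward_wall, arm.release)
```

The step budget matters: if the wall is not where image recognition said it was, the arm gives up instead of pushing indefinitely.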

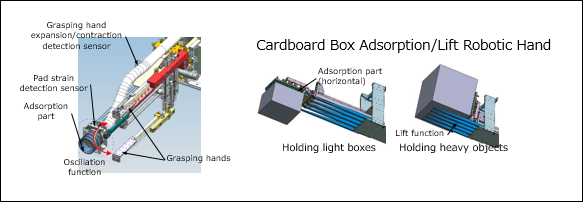

We also have to remember that boxes come in all different shapes and sizes. They may be stacked in a messy pile, or they may be tilted one way or another. Some are lighter or heavier than others. Human beings can identify and handle these differences easily: we know how to hold something that’s tilted, and how to adjust our posture when we lift something heavy. And so at Toshiba, we developed a robot hand with what’s called hybrid grip functionality, a feature that allows the hand to hold a variety of boxes in the skillful way that a human would.

Hybrid grip functionality allows the robot to hold objects of different shapes, sizes, and weights

Safety and Robots?

The development of highly-intelligent robots—robots that could perform these kinds of skillful movements—came about because the labor shortage, like we mentioned before, necessitated the use of robots in work processes that we previously thought had to be handled by human beings. But there’s a major challenge when it comes to this kind of human-to-robot replacement. The problem is how to guarantee safety.

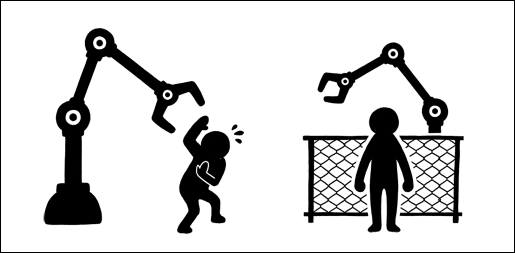

Having human beings and robots working closely together increases the risk of accidents; strict management—for example keeping robot and human work areas separate—will be required to keep people safe.

In the future, robots will be taking over some of the work that used to be done by humans, in some cases work done by people standing side by side. People will be working in closer proximity to robots than ever before. Human-to-human contact poses less danger. Contact with a robot, however, could lead to a major accident. That’s why we need safety technologies that adhere to standards such as ISO 13849-1 to keep human beings safe from robots.

Safety technologies are guided by basic principles. One of those is the principle of separation. This is a principle that encourages separation, or a lack of overlap, between robot and human work areas. But as more and more robots come to replace work previously conducted by human beings, some overlap would seem inevitable.

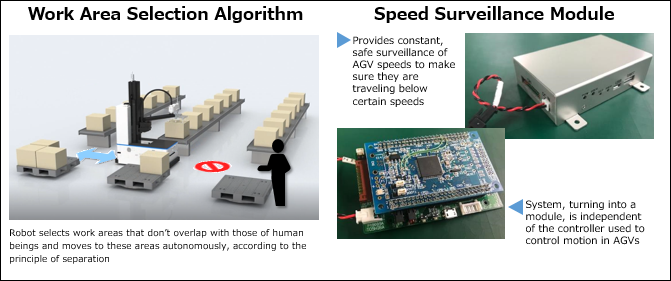

At Toshiba, we used a work area selection algorithm to develop a system where robots select work areas that don’t overlap with those of human beings, and move to these areas autonomously, to keep human beings safe.
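The article does not describe how the work area selection algorithm works internally, so the following Python sketch is only one plausible reading of it. It assumes work areas are axis-aligned rectangles on the floor plan, discards any candidate that overlaps an area currently used by people, and sends the robot to the nearest remaining one. The `Area` type and the “nearest wins” rule are hypothetical.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Area:
    """Hypothetical rectangular work area on the floor plan, in metres."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def overlaps(self, other):
        # Rectangles that only share an edge are treated as not overlapping.
        return (self.x_min < other.x_max and other.x_min < self.x_max
                and self.y_min < other.y_max and other.y_min < self.y_max)

    def center(self):
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


def select_work_area(candidates, human_areas, robot_xy):
    """Return the nearest candidate that overlaps no human area, or None."""
    free = [c for c in candidates
            if not any(c.overlaps(h) for h in human_areas)]
    if not free:
        return None
    return min(free, key=lambda area: dist(area.center(), robot_xy))


human_areas = [Area("packing", 0, 0, 4, 4)]
candidates = [
    Area("A", 3, 3, 6, 6),       # overlaps the packing area
    Area("B", 5, 0, 8, 3),       # free and close to the robot
    Area("C", 10, 10, 12, 12),   # free but far away
]
chosen = select_work_area(candidates, human_areas, robot_xy=(0, 0))
```

Returning `None` when every candidate overlaps a human area lets the caller keep the robot waiting rather than sending it into a shared space.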

We’ve also installed an independent speed surveillance module on our AGVs (automated guided vehicles), in addition to the controller used to control motion, to maintain constant objective surveillance of the vehicles and make sure they’re traveling below certain speeds.
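The key design point is independence: the module that watches the speed is separate from the controller that commands it, so a fault in one does not silence the other. Here is a minimal Python sketch of that idea. The `SpeedMonitor` class, the latching behavior, and the 1.0 m/s limit are assumptions for illustration and are not taken from Toshiba’s AGVs.

```python
class SpeedMonitor:
    """Hypothetical watchdog that runs apart from the motion controller."""

    def __init__(self, limit_m_s, trip_stop):
        self.limit_m_s = limit_m_s
        self.trip_stop = trip_stop  # callback that cuts drive power
        self.tripped = False

    def check(self, measured_speed_m_s):
        """Return True while the vehicle is allowed to keep moving.

        Once the limit is exceeded, the monitor latches: it stays tripped
        until it is deliberately reset, even if the speed drops again.
        """
        if not self.tripped and abs(measured_speed_m_s) > self.limit_m_s:
            self.tripped = True
            self.trip_stop()
        return not self.tripped

    def reset(self):
        self.tripped = False


stop_events = []
monitor = SpeedMonitor(limit_m_s=1.0, trip_stop=lambda: stop_events.append("stop"))
readings = [0.8, 1.2, 0.5]  # speeds from an independent sensor, in m/s
results = [monitor.check(speed) for speed in readings]
```

Latching is a deliberate choice in this sketch: after an overspeed event, the vehicle should not resume on its own just because the reading fell back under the limit.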

Toshiba believes that all of the technologies that make up a robot should be based around the safety of human beings. As such, we believe the very first priority should be removing the possibility of these kinds of incidents from the very first stage of development.
